Today, we’ll discuss the essential elements of neural network design. Understanding each of them will improve your grasp of how a neural network is built. Each element has a distinct job, and they all work together as a team. Let’s look at them one by one.

Neurons

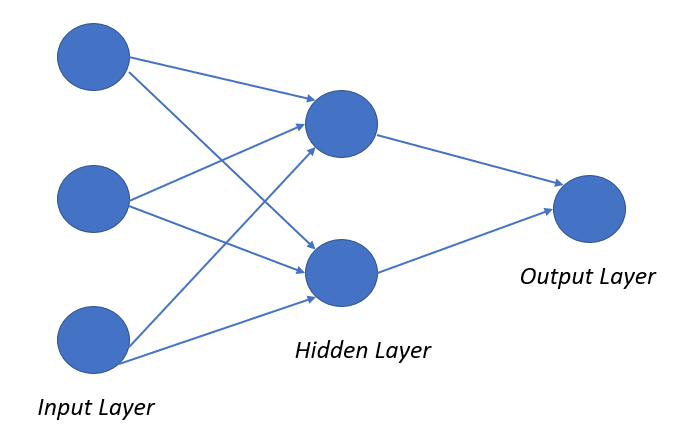

Neurons are the most fundamental unit of a neural network. In the diagram above, the neurons are represented by circles. They are loosely inspired by the neurons in the human brain. Each neuron takes some inputs, combines them (typically as a weighted sum plus a bias), and then applies an activation function that decides how much of that signal is passed on to the next neurons. The neurons are connected to each other, forming communication channels through which information flows.
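To make “combines the inputs and decides what to pass on” concrete, here is a minimal sketch of a single artificial neuron. It assumes the common formulation (weighted sum plus bias, then an activation) and uses the sigmoid activation; the function names `neuron` and `sigmoid` are illustrative, not taken from any library.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One neuron: weighted sum of the inputs plus a bias, passed through an activation."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# A neuron with two incoming connections.
output = neuron([1.0, 2.0], [0.5, -0.25], 0.0)
print(output)  # z = 0.5 - 0.5 + 0 = 0, and sigmoid(0) = 0.5
```

The activation output is what the next neurons receive: values near 0 mean “pass on very little”, values near 1 mean “pass on a strong signal”.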

Layers

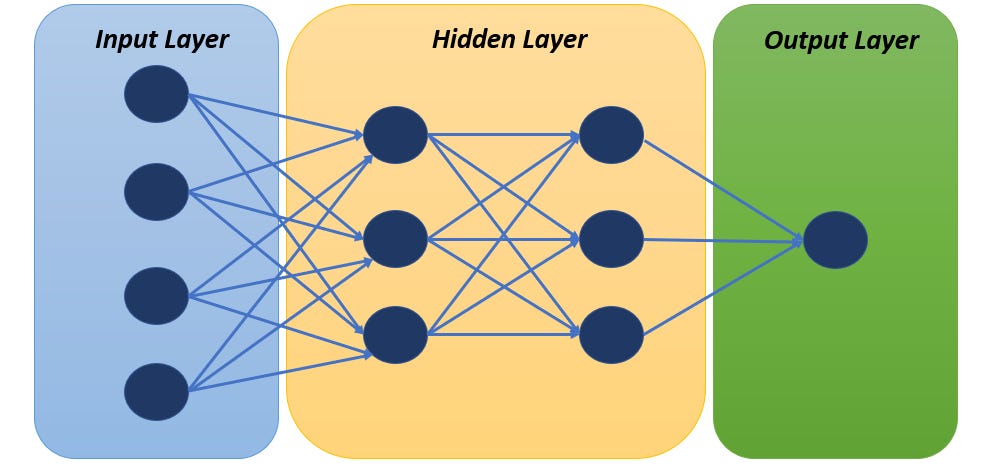

Layers are groups of neurons arranged one after another. Information flows from one layer to the next, so layers define the overall communication path of a neural network. There are three main types of layers:

Input Layer

This is the first layer in a neural network. It acts like the eyes of your neural network: it receives the initial input data. The number of neurons (nodes) in this layer equals the dimensionality (number of features) of the input data.

Hidden Layer

This is an intermediate layer between the input and output layers. It acts like the brain of your neural network. It receives data from the previous layer, performs some mathematical computations, and sends the result to the next layer. A neural network can have multiple hidden layers, which work together to recognize patterns in the data.

Output Layer

This is the last layer in a neural network. It acts like the mouth of your neural network. It provides the final answer or prediction.
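The flow from input layer to hidden layer to output layer can be sketched as a tiny forward pass. This is a minimal illustration, assuming fully connected (dense) layers, ReLU in the hidden layer, and no activation on the output; the weights are made-up numbers, and `dense_layer` is a hypothetical helper, not a library function.

```python
def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` belongs to one neuron in this layer."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

def relu(z):
    return max(0.0, z)

def identity(z):
    return z

# Input layer: 3 features, so 3 input nodes (the input layer simply holds the data).
x = [1.0, 0.5, -1.0]

# Hidden layer: 2 neurons, each with 3 weights (one per input feature).
hidden_w = [[0.2, -0.4, 0.1],
            [0.5, 0.3, -0.2]]
hidden_b = [0.0, 0.1]

# Output layer: 1 neuron with 2 weights (one per hidden neuron).
out_w = [[1.0, -1.0]]
out_b = [0.05]

h = dense_layer(x, hidden_w, hidden_b, relu)    # hidden layer activations
y = dense_layer(h, out_w, out_b, identity)      # final prediction
print(h, y)
```

Note how the shapes line up: each hidden neuron needs one weight per input feature, and the output neuron needs one weight per hidden neuron.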

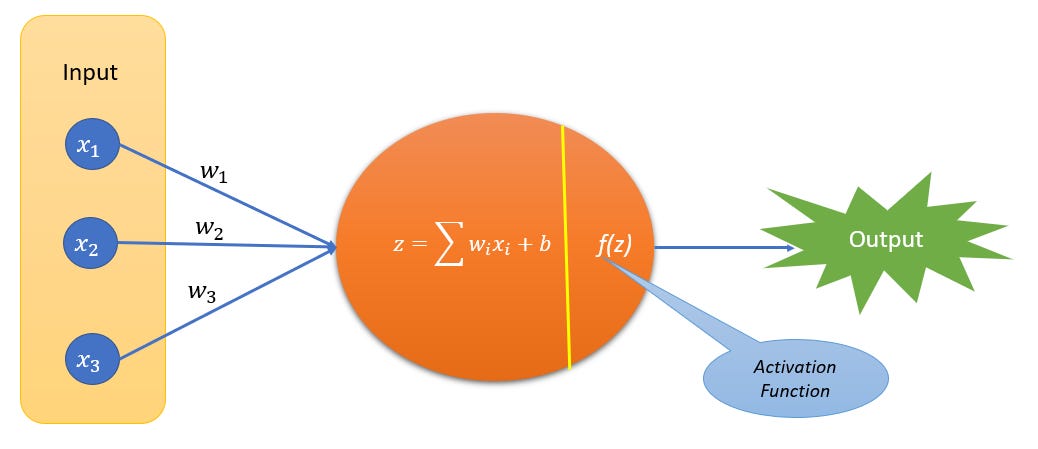

Activation Functions

An activation function introduces non-linearity, which helps a neural network capture complex, non-linear patterns in the data. It takes a neuron’s combined input (the weighted sum plus bias), applies a mathematical transformation, and passes the result on toward the final output.

Without an activation function, a neural network behaves like a linear model such as linear regression, no matter how many layers it has, because stacking linear operations still yields a linear function. It therefore fails to capture non-linear relationships, which limits its use for complex problems such as NLP and computer vision. The most commonly used activation functions include Sigmoid, Tanh, and ReLU.
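Here is a small sketch of the three activation functions named above, plus a check of the claim that stacked linear layers collapse into a single linear function. The layer functions `layer1` and `layer2` are hypothetical one-input “layers” chosen only for illustration.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))   # output in (0, 1)

def tanh(z: float) -> float:
    return math.tanh(z)                 # output in (-1, 1)

def relu(z: float) -> float:
    return max(0.0, z)                  # 0 for negative inputs, z otherwise

for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):.3f}  relu={relu(z):.1f}")

# Without an activation, two stacked linear "layers" are still one linear function.
def layer1(x: float) -> float:
    return 2.0 * x + 1.0

def layer2(x: float) -> float:
    return 3.0 * x - 2.0

def stacked(x: float) -> float:
    return layer2(layer1(x))            # 3(2x + 1) - 2 = 6x + 1, still a straight line

assert all(stacked(x) == 6.0 * x + 1.0 for x in (-1.0, 0.0, 2.5))
```

Inserting `relu` between `layer1` and `layer2` breaks this collapse, which is exactly what lets deeper networks model curved relationships.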

Parameters

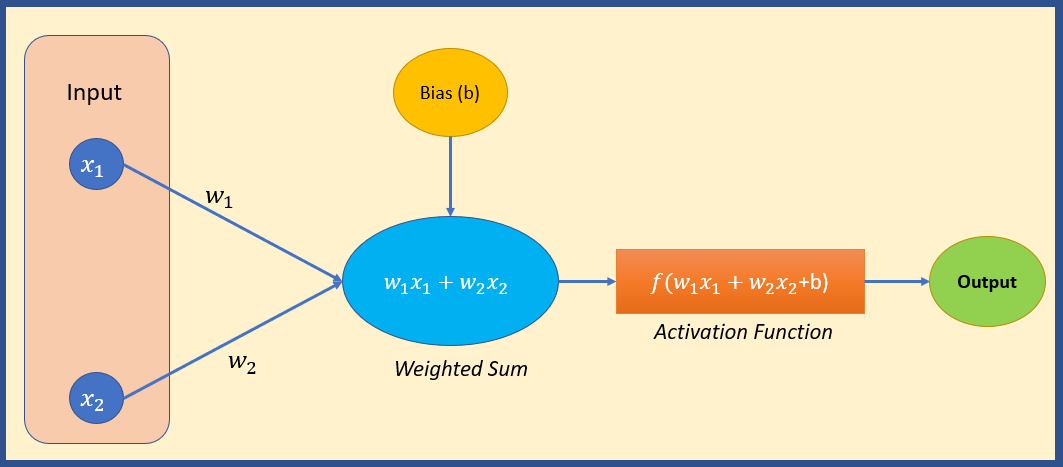

Parameters play an important role in neural networks because they determine how the network learns and makes predictions. Their values are adjusted during training to minimize prediction error. A neural network has two types of parameters: weights and biases.

In the diagram above, the weights are labeled w1 and w2, and the bias is labeled b.

Weights

Weights determine the strength of the connection between neurons; in other words, how much influence one neuron has on another. In a neural network, information passes from neuron to neuron through a sequence of layers before arriving at a final result, so these connection strengths shape how that information is communicated.

Biases

Biases are additional parameters, typically one per neuron in each layer, that help the model fit the data better by shifting the input to the activation function. They are analogous to the intercept in a linear regression model: even if all the weights are zero, the model can still produce a prediction because of the bias.
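Using the w1, w2, and b labels from the diagram, the sketch below shows the linear part of a two-input neuron (activation omitted for clarity). It illustrates that weights scale each input’s influence, while the bias alone determines the output when every weight is zero. The function `predict` is a hypothetical helper for this example.

```python
def predict(x1: float, x2: float, w1: float, w2: float, b: float) -> float:
    """Linear part of a two-input neuron: w1*x1 + w2*x2 + b (no activation applied)."""
    return w1 * x1 + w2 * x2 + b

# Weights scale each input's influence: here x2 matters four times as much as x1.
print(predict(1.0, 3.0, w1=0.5, w2=2.0, b=0.0))   # 0.5 + 6.0 = 6.5

# With all weights at zero, the output is just the bias, like a regression intercept.
print(predict(1.0, 3.0, w1=0.0, w2=0.0, b=4.0))   # 4.0
```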

Loss Function

A loss function measures the difference between the actual values and the predicted values. In essence, it is an indicator of the model’s performance: a high loss means the model is performing poorly, and a low loss means it is performing well.

The main objective of the training process is to minimize this loss function as much as possible in order to make accurate predictions.

Different loss functions are used depending on the problem at hand. The most common are Mean Squared Error (MSE) for regression tasks and Cross-Entropy Loss for classification tasks.
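The two loss functions mentioned above can be written in a few lines. This sketch assumes the binary (two-class) form of cross-entropy with labels in {0, 1} and predicted probabilities; multi-class cross-entropy follows the same idea with one probability per class. The clipping constant `eps` is an illustrative choice to avoid `log(0)`.

```python
import math

def mse(y_true, y_pred):
    """Mean Squared Error: average of squared differences (regression)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Binary cross-entropy for labels in {0, 1} and predicted probabilities in (0, 1)."""
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

print(mse([3.0, 5.0], [2.5, 5.0]))               # ((0.5)^2 + 0^2) / 2 = 0.125
print(binary_cross_entropy([1, 0], [0.9, 0.1]))  # confident and correct: low loss
print(binary_cross_entropy([1, 0], [0.1, 0.9]))  # confident and wrong: high loss
```

In both cases the loss shrinks as predictions move toward the true values, which is what the optimizer exploits during training.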

Optimization Algorithm

Optimization algorithms minimize the loss function by iteratively adjusting the values of the parameters (weights and biases). The process involves computing the gradient of the loss function with respect to each parameter and then updating each parameter in the direction opposite to its gradient, which reduces the loss.

The most commonly used optimization algorithms for neural networks include Stochastic Gradient Descent (SGD), SGD with Momentum, Adam (Adaptive Moment Estimation), AdaGrad, and RMSprop.
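The gradient-based update described above can be sketched on the simplest possible model: a single weight `w` and bias `b` fitting a straight line with MSE loss. This is plain full-batch gradient descent, the basic idea that SGD, Momentum, and Adam build on; the data, learning rate, and step count are illustrative assumptions.

```python
# Toy data generated from y = 2x + 1 (noise-free, for illustration).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = 0.0, 0.0          # parameters start at arbitrary values
learning_rate = 0.05

for step in range(2000):
    n = len(xs)
    # Gradients of MSE = mean((w*x + b - y)^2) with respect to w and b.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    # Move each parameter opposite to its gradient to reduce the loss.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(round(w, 3), round(b, 3))  # approaches 2.0 and 1.0
```

A neural network does the same thing with many more parameters; backpropagation is the technique that computes all of those gradients efficiently.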

Hyperparameters

Hyperparameters are external settings that control the training process. They are not learned from the data; instead, they guide how the optimal parameter values are found and influence the model’s overall performance. Hyperparameter values need to be chosen carefully for efficient training. Some important hyperparameters are listed below:

Learning Rate

It controls the size of the steps with which the model’s parameters are adjusted. If the learning rate is too small, convergence can be very slow; if it is too large, training may overshoot the optimal solution.
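The effect of the learning rate is easy to see on a one-variable problem. This sketch runs gradient descent on f(x) = x², whose minimum is at x = 0, with three illustrative learning rates; `gradient_descent_on_square` is a hypothetical helper written for this example.

```python
def gradient_descent_on_square(learning_rate: float, steps: int = 20, x0: float = 5.0) -> float:
    """Minimize f(x) = x**2 (minimum at x = 0) and return the final x."""
    x = x0
    for _ in range(steps):
        grad = 2 * x              # derivative of x**2
        x -= learning_rate * grad
    return x

for lr in (0.01, 0.1, 1.1):
    print(lr, gradient_descent_on_square(lr))
# 0.01: still far from 0 after 20 steps (slow convergence)
# 0.1:  close to 0
# 1.1:  |x| keeps growing, overshooting the minimum on every step (divergence)
```

Each step multiplies x by (1 - 2 * learning_rate), so a rate above 1.0 flips the sign and grows the magnitude on every step.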

Batch Size

It is the number of training samples used in one iteration of the optimization algorithm to update the model’s parameters.

Number of Epochs

It indicates the number of times the entire training dataset is passed through the neural network. Too few epochs may lead to underfitting and too many epochs may lead to overfitting.
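Batch size and number of epochs together determine how many parameter updates happen during training. The sketch below assumes a dataset of 1,000 samples (stand-in integers), a batch size of 32, and 3 epochs; `minibatches` is a hypothetical helper, and the actual parameter update is left as a placeholder.

```python
import math
import random

def minibatches(data, batch_size, rng):
    """Shuffle the data and yield consecutive slices of at most `batch_size` samples."""
    data = list(data)
    rng.shuffle(data)
    for start in range(0, len(data), batch_size):
        yield data[start:start + batch_size]

dataset = list(range(1000))   # stand-in for 1,000 training samples
batch_size = 32
num_epochs = 3
rng = random.Random(0)

updates = 0
for epoch in range(num_epochs):              # one epoch = one full pass over the dataset
    for batch in minibatches(dataset, batch_size, rng):
        updates += 1                         # one parameter update per batch would happen here

print(math.ceil(len(dataset) / batch_size), updates)  # 32 batches per epoch, 96 updates total
```

Since 1,000 is not a multiple of 32, the last batch in each epoch holds only 8 samples; many frameworks let you keep or drop such a partial batch.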

Number of Layers

It determines the depth of the neural network. More layers help capture more complex patterns in the data, but at the price of higher computational cost and a greater risk of overfitting.
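One way to see the computational cost of extra layers is to count parameters. This sketch assumes a fully connected network where every neuron in one layer connects to every neuron in the next and each non-input neuron has one bias; the layer sizes are illustrative and `count_parameters` is a hypothetical helper.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the neurons per layer, from input to output,
    e.g. [4, 8, 1] = 4 input features, one hidden layer of 8, 1 output.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out   # weights + one bias per neuron
    return total

print(count_parameters([4, 8, 1]))         # (4*8 + 8) + (8*1 + 1) = 49
print(count_parameters([4, 8, 8, 8, 1]))   # two extra hidden layers: 193
```

Every added parameter must be stored, updated on each step, and fitted from data, which is where both the extra computation and the extra overfitting risk come from.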

Evaluation Metrics

Evaluation metrics are used to assess the model’s performance on validation and test sets once training is complete. Different evaluation metrics are used depending on the problem at hand. The most commonly used include Mean Squared Error (MSE), Accuracy, Precision, Recall, and F1-Score.
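For classification, Accuracy, Precision, Recall, and F1-Score all derive from counting correct and incorrect predictions. This sketch assumes a binary task where label 1 is the positive class; `binary_metrics` is a hypothetical helper and the labels are made up.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)  # false negatives
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1

y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]
print(binary_metrics(y_true, y_pred))  # TP=2, TN=2, FP=1, FN=1: each metric is about 0.667
```

Precision asks “of the predicted positives, how many were right?”, while recall asks “of the actual positives, how many did we find?”; F1 balances the two.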

Was this helpful?

Curious about a specific AI/ML topic? Let me know in the comments.

Also, please share your feedback and suggestions; they help me keep going. Even a “like” on my posts tells me that they are helpful to you.

See you next Friday!

-Kavita

Quote of the day

"It does not matter how slowly you go as long as you do not stop." - Confucius

P.S. Let’s grow our tribe. Know someone who is curious to dive into ML and AI? Share this newsletter with them and invite them to be a part of this exciting learning journey.