Difference between Normalization and Standardization! #17

They are not the same!

If you are preparing for interviews in the domain of Data Science and Machine Learning, then this topic is very important and shouldn’t be skipped.

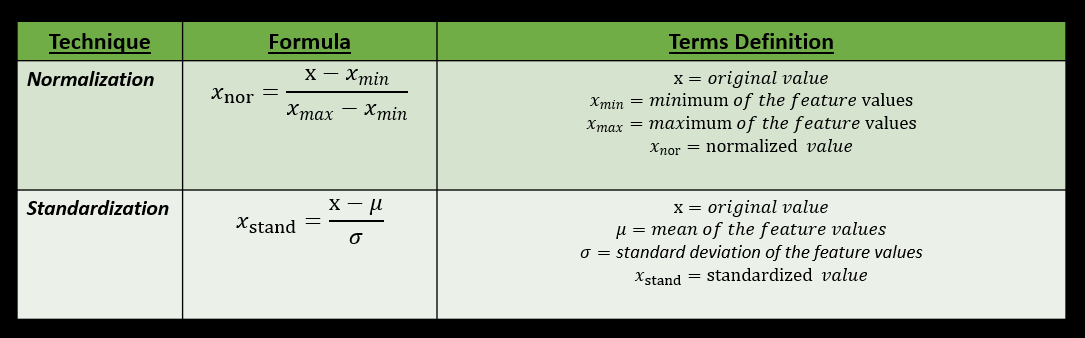

Normalization and Standardization are data preprocessing techniques that fall under feature scaling methods. The two terms are often used interchangeably, but they are not the same: they work differently and suit different situations.

If you want a quick overview of feature scaling methods in machine learning, check out the link below:

https://kavitagupta.substack.com/p/feature-scaling-in-machine-learning

Normalization

It is often referred to as Min-Max scaling because it uses the minimum and maximum values of the feature to rescale its values.

This technique rescales the feature values into a fixed range, usually [0, 1] or [-1, 1].
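A minimal sketch of min-max scaling in plain Python, assuming the feature is a list of numbers with at least two distinct values (otherwise max - min is zero and the formula breaks). The function name `min_max_scale` and its `new_min`/`new_max` parameters are hypothetical, chosen for illustration; the function applies x' = new_min + (x - min) * (new_max - new_min) / (max - min).

```python
def min_max_scale(values, new_min=0.0, new_max=1.0):
    """Rescale values linearly into [new_min, new_max] (hypothetical helper)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("min-max scaling needs at least two distinct values")
    span = new_max - new_min
    # x' = new_min + (x - min) * (new_max - new_min) / (max - min)
    return [new_min + (x - lo) * span / (hi - lo) for x in values]


ages = [18, 25, 40, 60]                # made-up feature values
print(min_max_scale(ages))             # rescaled into [0, 1]
print(min_max_scale(ages, -1, 1))      # rescaled into [-1, 1]
```

The smallest value always maps to `new_min` and the largest to `new_max`, which is why this method depends on knowing the feature's minimum and maximum.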

Best to use when:

-The minimum and maximum values of the feature are known.

-The feature distribution is unknown or is not Gaussian (Normal).

-The rescaled values need to be in a fixed range.

-You want input features to be on a common scale. For example, in neural networks, having features on the same scale can help the gradient descent optimization algorithm converge faster.

Standardization

It is also known as Z-score Normalization.

It rescales the feature values so that they have a mean of 0 and a standard deviation of 1 (the properties of a standard normal distribution).
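A minimal sketch of standardization (z-score) in plain Python, assuming the feature is a list of numbers that are not all equal. The helper name `z_score` is hypothetical. It uses the population standard deviation (dividing by n), which is the convention scikit-learn's `StandardScaler` follows; dividing by n - 1 instead would give slightly different values.

```python
import statistics


def z_score(values):
    """Standardize values to mean 0 and standard deviation 1 (hypothetical helper)."""
    mu = statistics.mean(values)
    # Population standard deviation (divides by n).
    sigma = statistics.pstdev(values)
    if sigma == 0:
        raise ValueError("standardization needs values that are not all equal")
    # z = (x - mean) / standard deviation
    return [(x - mu) / sigma for x in values]


ages = [18, 25, 40, 60]                # made-up feature values
z = z_score(ages)
print(z)
print(statistics.mean(z), statistics.pstdev(z))  # approximately 0 and 1
```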

Best to use when:

-The mean and standard deviation of the feature values are known.

-The feature distribution is assumed to be Gaussian (Normal).

-The rescaled values don’t need to be in a fixed range.

-The input features need to be centered around the mean.
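To see the difference side by side, here is a sketch that applies both formulas to the same made-up feature values (the list `ages` is hypothetical). The normalized values always land between 0 and 1, while the standardized values are centered on 0 and are not confined to a fixed range.

```python
import statistics

# Hypothetical feature values, scaled both ways for comparison.
ages = [18, 25, 40, 60, 95]

# Normalization (min-max) into [0, 1]
lo, hi = min(ages), max(ages)
normalized = [(x - lo) / (hi - lo) for x in ages]

# Standardization (z-score) with the population standard deviation
mu, sigma = statistics.mean(ages), statistics.pstdev(ages)
standardized = [(x - mu) / sigma for x in ages]

for x, n, s in zip(ages, normalized, standardized):
    print(f"{x:>3}  normalized={n:.3f}  standardized={s:+.3f}")
```

Here the largest standardized value is above 1, which illustrates why standardization is the choice when the rescaled values don't need to stay in a fixed range.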

Would you like to add anything to the list?

Why is it important to know the difference between Normalization and Standardization?

It helps you make informed preprocessing decisions for a particular dataset and a particular machine learning model. Ignoring the difference can lead to faulty predictions and unreliable results.

Do you agree?

Here are two references that explain standardization and normalization with coding examples.

https://machinelearningmastery.com/standardscaler-and-minmaxscaler-transforms-in-python/

https://www.analyticsvidhya.com/blog/2020/04/feature-scaling-machine-learning-normalization-standardization/

Are they helpful?

Curious about a specific AI/ML topic? Let me know in the comments.

Also, please share your feedback and suggestions. They will help me keep going. Even a “like” on my posts tells me that they are helpful to you.

See you soon!

-Kavita

P.S. Let’s grow our tribe. Know someone who is curious to dive into ML and AI? Share this newsletter with them and invite them to be a part of this exciting learning journey.

Appreciate this 👌

Waiting for the code example. It's a very interesting topic that could be applied to all kinds of e-commerce selling when you have a huge portfolio and want to increase your profit.