Epochs and Iterations in Neural Networks! #12

They are not the same!

In this newsletter, we’ll understand the difference between epochs and iterations with the help of a real-life example and a numerical example.

Real-Life Example: Preparing for an exam

During exam preparation, you go through the same study material several times, revising until you are sure you have understood the concepts well.

Since this example seems familiar, we’ll use it to understand iterations and epochs.

Imagine you are preparing for an important exam. The study material contains 10 chapters. Your objective is to go through the entire study material multiple times to strengthen your understanding of the concepts.

Epoch

When you go through the entire study material (10 chapters) once, it is called one epoch. When you go through the entire study material n times, it is called n epochs.

Iteration

Going through all 10 chapters in one sitting would be hectic and unproductive. So, you plan your schedule so that you finish 2 chapters in one sitting and then take a short break to recharge.

With this schedule, you’ll need 5 sittings to finish the entire study material once. Each time you finish 2 chapters, that’s one iteration. So, you’ll need 5 sittings or iterations to complete one epoch.

If you want to go through the entire study material n times, that is, complete n epochs, you’ll need 5*n sittings or iterations.

Let me know if this example was helpful.

Numerical Example: Training a Neural Network

Suppose you have a training dataset of 3000 samples. Instead of processing the entire dataset all at once, you divide it into smaller subsets called batches and train on these batches one by one. The number of training samples in a batch is called the batch size. The batch size can be as small as 1 and as large as the entire training dataset.

Epoch

One complete pass through the entire training dataset is called an epoch.

Iteration

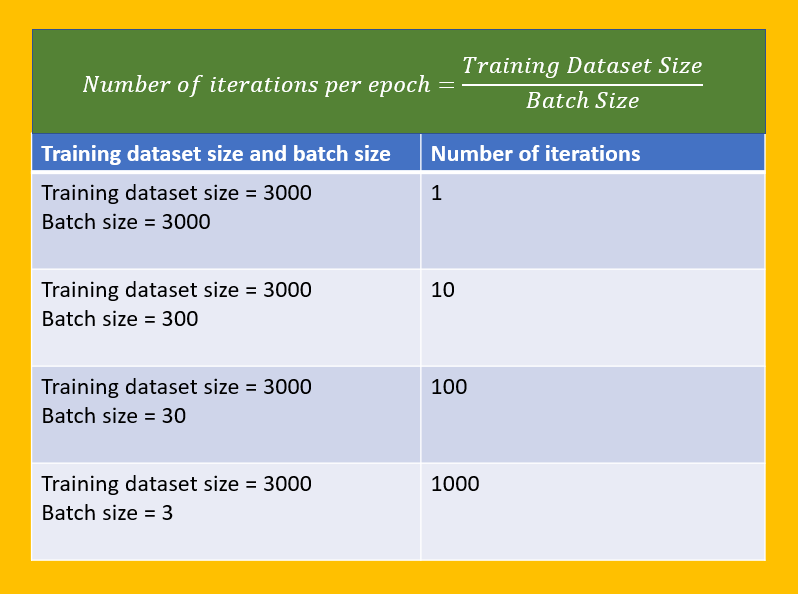

Processing one batch to update the model parameters is called an iteration. The number of iterations per epoch is the number of batches required to cover the entire training dataset.

The following table tells how the number of iterations per epoch changes with the change in batch size.
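To see where such numbers come from, here is a minimal Python sketch. It assumes the 3000-sample dataset from this example; the batch sizes in the loop are picked only for illustration, and the helper name `iterations_per_epoch` is hypothetical. It also assumes the last batch is kept even when it is smaller than the rest, which is why the division is rounded up.

```python
import math


def iterations_per_epoch(num_samples: int, batch_size: int) -> int:
    """Number of batches needed to cover the dataset once.

    Assumes a smaller final batch is still processed (rounding up).
    """
    if not 1 <= batch_size <= num_samples:
        raise ValueError("batch_size must be between 1 and num_samples")
    return math.ceil(num_samples / batch_size)


NUM_SAMPLES = 3000  # dataset size from the example

# Illustrative batch sizes only, not taken from the original table.
for batch_size in (1, 100, 500, 1000, 3000):
    print(batch_size, iterations_per_epoch(NUM_SAMPLES, batch_size))
```

With a batch size of 1, every sample is its own iteration (3000 iterations per epoch). With a batch size of 3000, the whole dataset is one batch (1 iteration per epoch).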

Was this example helpful?

In summary, an iteration is a single parameter update step within an epoch.

An epoch is made up of one or more iterations covering the entire training dataset once for model training.
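The relationship between the two can be sketched as a pair of nested loops: the outer loop runs over epochs and the inner loop runs over batches. This is only a skeleton under simple assumptions. The function name `count_training_steps` is hypothetical, and the actual parameter update is left as a comment because it depends on the model and framework you use.

```python
def count_training_steps(num_samples: int, batch_size: int, num_epochs: int) -> int:
    """Walk through the epoch/iteration structure and count the iterations."""
    total_iterations = 0
    for epoch in range(num_epochs):  # one epoch = one full pass over the data
        for start in range(0, num_samples, batch_size):  # one iteration per batch
            end = min(start + batch_size, num_samples)
            batch_indices = range(start, end)  # samples that form this batch
            # A real training loop would compute the loss on this batch
            # and update the model parameters here.
            total_iterations += 1
    return total_iterations


# 3000 samples, batch size 500, 3 epochs: 6 iterations per epoch, 18 in total.
print(count_training_steps(3000, 500, 3))
```

The same skeleton covers the exam example: `count_training_steps(10, 2, 1)` gives 5, one iteration per sitting of 2 chapters.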

Curious about a specific AI/ML topic? Let me know in the comments.

Also, please share your feedback and suggestions. That will help me keep going. Even a “like” on my posts will tell me that they are helpful to you.

See you next Friday!

-Kavita

Quote of the day

“Develop a passion for learning. If you do, you will never cease to grow.” — Anthony J. D’Angelo

P.S. Let’s grow our tribe. Know someone who is curious to dive into ML and AI? Share this newsletter with them and invite them to be a part of this exciting learning journey.
